What is Kubernetes?

Kubernetes is an open-source platform that automates the deployment, scaling, and management of containerized workloads and services across a cluster of hosts.

Kubernetes was originally developed by Google, which has contributed heavily to Linux container technology. For years, Google ran its internal workloads on a system called Borg, which handled nearly 2-3 billion deployments a week. That experience with Borg gave Google’s engineers a clear picture of what Kubernetes should be.

Kubernetes is a successor to Google’s Borg, which is why Borg’s influence shows throughout its design. Google open-sourced Kubernetes in 2014, and the project is now maintained by the CNCF (Cloud Native Computing Foundation), which is itself part of the Linux Foundation. Before getting hands-on with Kubernetes, let’s go over some basic terms:

Container: Containers are similar to virtual machines, but they are smaller and have relaxed isolation properties so that applications can share the host operating system. They are a lightweight way to bundle and run your applications. Like a virtual machine, a container has its own file system, share of CPU and memory, process space, and more. Because containers are decoupled from the underlying infrastructure, they are portable across clouds and OS distributions.

Containers have transformed the way people develop, deploy, and maintain software. They offer isolation with less overhead and greater flexibility than virtual machines. In a containerized architecture, different services are packaged into separate containers and deployed across a cluster of physical or virtual machines. Containers can be reached through an external IP address. Docker is one of the most widely used containerization platforms.

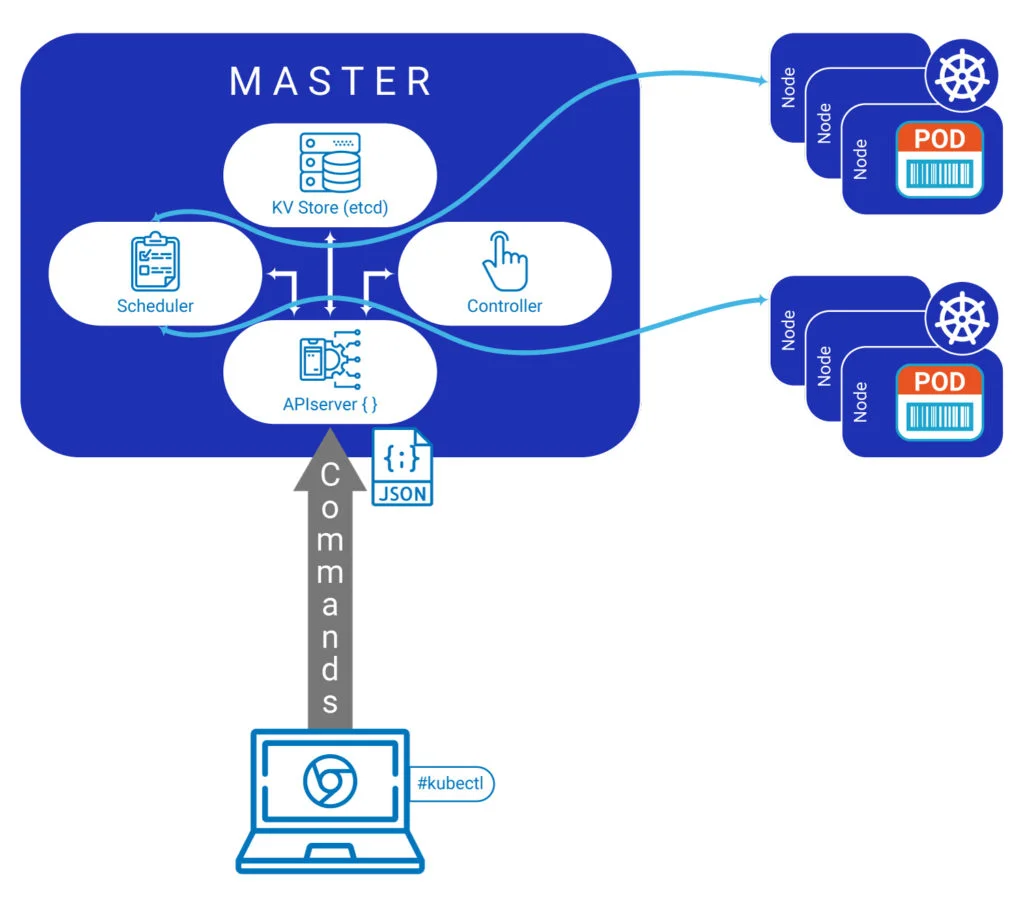

Master: The Kubernetes master controls the Kubernetes nodes. It is the control plane for the cluster, and all task assignments originate from it.

Node: A Kubernetes node is a machine on which containers are deployed. Nodes carry out the tasks assigned to them, and the master controls them. You can create nodes on infrastructure platforms such as OpenStack or Amazon EC2. Before you use Kubernetes to deploy your applications, you need to lay down this basic infrastructure.

Every cluster is made up of Kubernetes nodes; the term cluster refers to the entire running system. A node may be a virtual machine or a physical machine.

Pods: A pod is the smallest unit that can be created or managed in a Kubernetes cluster. Each pod represents a single instance of an application or running process. A pod contains one or more containers, which share the same IP address, IPC, hostname, and other resources.

Pods make it easy to move containers around the cluster by abstracting network and storage away from the underlying container. Pods are short-lived and can be created or destroyed as required.

Services: A Service defines rules for how a group of pods can be accessed over the network. Services decouple work definitions from the pods, i.e. if the pods behind the back end of an application are replaced, the front end does not need to know about it.
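To make the Pod and Service terms a little more concrete, here is a minimal sketch in plain Python. It builds manifest-shaped dictionaries and mimics how a Service picks its group of pods by matching labels. The pod names, labels, and image tags are made up for illustration, and the matching function is a simplification of equality-based label selection, not the actual Kubernetes implementation.

```python
import json


def make_pod(name, labels, image):
    """Build a minimal Pod manifest as a plain dict (illustrative values only)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": labels},
        "spec": {"containers": [{"name": name, "image": image}]},
    }


def matches(selector, labels):
    """A pod matches when it carries every key/value pair in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(service, pods):
    """Return the names of the pods that a Service's selector would pick up."""
    selector = service["spec"]["selector"]
    return [
        pod["metadata"]["name"]
        for pod in pods
        if matches(selector, pod["metadata"]["labels"])
    ]


# Hypothetical pods: two front-end replicas and one database pod.
pods = [
    make_pod("web-1", {"app": "web", "tier": "frontend"}, "nginx:1.25"),
    make_pod("web-2", {"app": "web", "tier": "frontend"}, "nginx:1.25"),
    make_pod("db-1", {"app": "db"}, "postgres:16"),
]

# Hypothetical Service that targets every pod labelled app=web.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {"selector": {"app": "web"}, "ports": [{"port": 80, "targetPort": 80}]},
}

selected = select_pods(service, pods)
print(json.dumps(selected))
```

Because the Service refers to labels rather than to individual pods, any of the `web-*` pods can be destroyed and recreated without the clients of the Service noticing.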

Kubelet: The kubelet supervises the state of the pods on its node. It ensures that all the defined containers are up and healthy, and it starts, stops, and manages containers organized into pods as directed by the control plane. It reports node and pod status to the control plane; when a node fails, the controllers launch replacement pods on other healthy nodes. It also reads cluster configuration and service information through the API server.

Kube-proxy: A network proxy and load balancer that runs on each node. It implements the Service abstraction along with other networking operations and routes traffic based on the IP address and port number of the incoming request.

Kubectl: The command-line tool that users run to communicate with the Kubernetes API server.

How Does Kubernetes Work? What Can You Do with Kubernetes?

Kubernetes is used for container orchestration. It helps you manage containers and keep downtime to a minimum. It automates much of the operational work and gives you a framework for running distributed systems.

Container orchestration consists of the arrangement, management, and coordination of containers in their clusters. It covers deployment, scaling, replication, and more. As an orchestration tool, Kubernetes is well suited to managing microservices.

Kubernetes can work with any container system that follows the Open Container Initiative (OCI) standards. The best known of these is Docker.

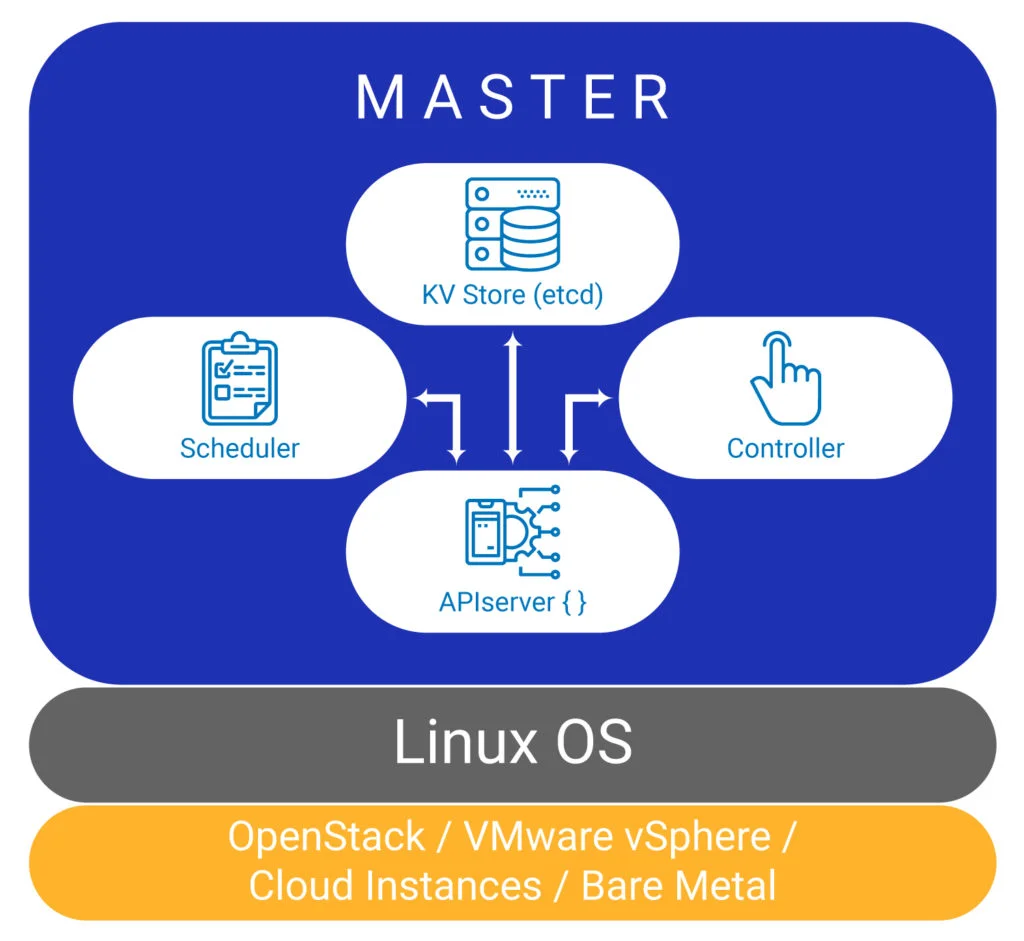

The Kubernetes architecture consists of two main parts: the master node and the worker nodes. The master node has four main components, counting etcd, and each worker node has three.

Master Node

The master node works like a manager overseeing a team of nodes: it decides which node handles which request. The master node runs only on Linux but is not tied to a particular platform.

The main components of the master node are:

Kube-apiserver

Kube-apiserver is the front end of the control plane. It exposes a REST API, which lets different tools and libraries communicate with it readily. All communication between the master and the worker nodes goes through this API.
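Since the API server speaks REST, every object in the cluster has a URL path. The sketch below shows how those paths are laid out: core resources such as pods and nodes live under `/api/v1`, while resources in named API groups such as `apps` live under `/apis/<group>/<version>`. The helper name `resource_path` and the sample object names are hypothetical; only the path layout is taken from the Kubernetes API conventions.

```python
def resource_path(resource, namespace=None, name=None, group=None, version="v1"):
    """Build the URL path for a Kubernetes API resource (illustrative helper)."""
    # The core group is served under /api/<version>; named groups
    # (for example "apps") are served under /apis/<group>/<version>.
    prefix = f"/apis/{group}/{version}" if group else f"/api/{version}"
    parts = [prefix]
    if namespace:
        parts += ["namespaces", namespace]
    parts.append(resource)
    if name:
        parts.append(name)
    return "/".join(parts)


# List all pods in the "default" namespace.
print(resource_path("pods", namespace="default"))

# Nodes are cluster-scoped, so no namespace segment appears.
print(resource_path("nodes"))

# A single Deployment named "web" (hypothetical) in the "apps" group.
print(resource_path("deployments", namespace="default", name="web", group="apps"))
```

A tool such as kubectl ultimately sends HTTP requests to paths of this shape, with authentication handled separately.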

Kube-controller-manager

Kube-controller-manager lives up to its name. It runs the controllers, handles namespace creation, and performs garbage collection. It oversees the various controllers and keeps the system running if a node goes down. It also allows Kubernetes to interact with infrastructure providers that have different capabilities, features, and APIs.

This allows Kubernetes to adjust resources and use additional services required to satisfy the work requirements of the clusters.

The kube-controller-manager does its work by communicating with the API server to manage cluster objects such as endpoints. It runs the following controllers:

Node Controller: It manages nodes. It tracks each node’s status and notices and responds when a node goes down.

Replication Controller: It maintains the required number of pods.

Service Account and Token Controller: It creates API tokens and default accounts for new namespaces.

Endpoints Controller: It populates and maintains Endpoints objects, which join services and pods.
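All of these controllers follow the same basic pattern: compare the desired state with the actual state and act to close the gap. Below is a minimal sketch of one such reconciliation pass for a replica count, loosely modeled on what the Replication Controller does. The function name, the `web-N` naming scheme, and the in-memory list of pods are assumptions made for the example; a real controller watches the API server instead.

```python
def reconcile(desired_replicas, running_pods, next_id):
    """Run one reconciliation pass.

    Returns the pod list after the pass, the actions taken, and the next
    free numeric suffix for new pod names.
    """
    pods = list(running_pods)
    actions = []

    # Too few pods: create new ones until the desired count is reached.
    while len(pods) < desired_replicas:
        name = f"web-{next_id}"  # hypothetical naming scheme
        next_id += 1
        pods.append(name)
        actions.append(("create", name))

    # Too many pods: delete the most recently added ones.
    while len(pods) > desired_replicas:
        name = pods.pop()
        actions.append(("delete", name))

    return pods, actions, next_id


# Scale up from one pod to three, then back down to one.
pods, actions, next_id = reconcile(3, ["web-0"], next_id=1)
print(pods, actions)

pods_after, actions_after, _ = reconcile(1, pods, next_id)
print(pods_after, actions_after)
```

Running the pass repeatedly is safe: once the actual count equals the desired count, it takes no action.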

Kube-scheduler

The scheduler assigns workloads to specific nodes in the cluster. It tracks the available capacity on each host to make sure that workloads do not exceed the available resources.

The scheduler watches for newly created pods that have no node assigned and distributes them across the worker nodes. It reads each workload’s requirements, analyzes the current infrastructure, and ensures that each worker node’s load stays within an appropriate threshold.

The scheduler makes its decision based on the pod’s requirements and the existing load on the worker nodes.
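The decision described above can be pictured as two steps: filter out the nodes that cannot fit the pod, then score the rest and pick the best one. The sketch below is a deliberately simplified illustration of that idea. The `Node` class, the node names, and the scoring rule (prefer the node with the most CPU left after placement) are assumptions for this example; the real scheduler weighs many more factors.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_millis: int   # free CPU in millicores
    free_memory_mib: int   # free memory in MiB


def schedule(pod_cpu_millis, pod_memory_mib, nodes):
    """Return the name of the chosen node, or None if the pod fits nowhere."""
    # Step 1 (filter): keep only nodes with enough free CPU and memory.
    feasible = [
        node for node in nodes
        if node.free_cpu_millis >= pod_cpu_millis
        and node.free_memory_mib >= pod_memory_mib
    ]
    if not feasible:
        return None

    # Step 2 (score): prefer the node with the most CPU left after
    # placement, breaking ties on the memory left.
    best = max(
        feasible,
        key=lambda node: (
            node.free_cpu_millis - pod_cpu_millis,
            node.free_memory_mib - pod_memory_mib,
        ),
    )
    return best.name


# Hypothetical cluster of three worker nodes.
nodes = [
    Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millis=1500, free_memory_mib=2048),
]

print(schedule(1000, 1024, nodes))  # node-a is filtered out for lack of CPU
print(schedule(8000, 1024, nodes))  # no node has 8000 millicores free
```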

etcd

All configuration information about the cluster’s state is stored in etcd as key-value pairs, so that the overall state of the cluster can be shared. This state records which nodes are part of the cluster and which pods should be running on it. Nodes can refer to the global configuration data stored there to set themselves up whenever they are regenerated.
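To get a feel for what storing cluster state as key-value pairs means, here is a toy in-memory store with path-like keys, a revision counter, and prefix listing. It is only a sketch of the idea: the class, its methods, and the `/cluster/...` key layout are invented for this example and are not the etcd API or the real key layout Kubernetes uses.

```python
class KeyValueStore:
    """Toy key-value store with a global revision counter (not the etcd API)."""

    def __init__(self):
        self._data = {}
        self._revision = 0

    def put(self, key, value):
        """Store a value and return the revision at which it was written."""
        self._revision += 1
        self._data[key] = (value, self._revision)
        return self._revision

    def get(self, key):
        """Return the value for a key, or None if the key is absent."""
        entry = self._data.get(key)
        return entry[0] if entry else None

    def list_prefix(self, prefix):
        """Return all key/value pairs whose key starts with the prefix."""
        return {
            key: value
            for key, (value, _revision) in sorted(self._data.items())
            if key.startswith(prefix)
        }


store = KeyValueStore()

# Hypothetical keys: which nodes exist and which pods should be running.
store.put("/cluster/nodes/node-a", "Ready")
store.put("/cluster/pods/default/web-1", "node-a")
last_revision = store.put("/cluster/pods/default/web-2", "node-a")

print(store.get("/cluster/nodes/node-a"))
print(store.list_prefix("/cluster/pods/"))
```

Listing by prefix is what lets a component ask for "all pods" or "all nodes" in one query.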

Worker Nodes

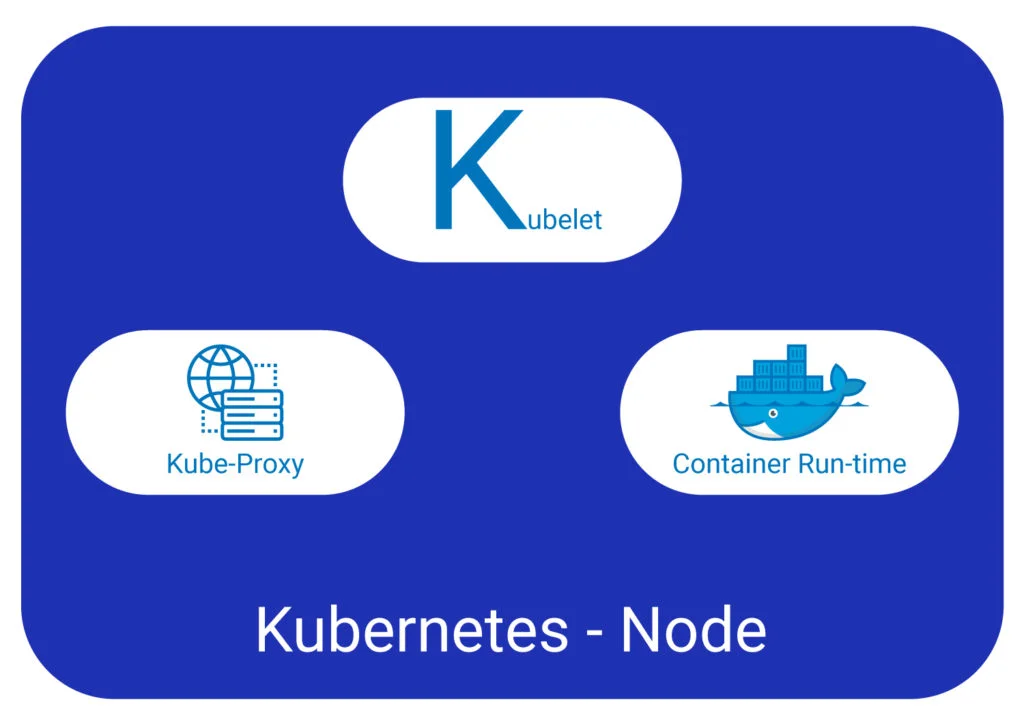

Worker nodes contain the components required to communicate with the master node. They are simple compared to the master node and follow its instructions like drones. In short, a node provides all the services necessary to run pods. The main components are:

Kubelet

The kubelet is the main point of contact for each node in the cluster. It is a small service that relays information to and from the control plane services and, through the API server, reads configuration details from the etcd store or writes new values to it.

It communicates with the master components to authenticate to the cluster and to receive work in the form of a manifest that defines the workload and its operating parameters. It checks the health of the containers in a pod and ensures that volumes are mounted as the manifest specifies. It links the node to the API server.
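The health checking mentioned above can be sketched as a simple rule: a container is restarted once its probe has failed a certain number of times in a row. The function below simulates that rule over a recorded sequence of probe results. The function name and the input format are assumptions for this example; the default of three consecutive failures mirrors the default `failureThreshold` of Kubernetes probes.

```python
def restarts_needed(probe_results, failure_threshold=3):
    """Count the restarts triggered by runs of consecutive failed probes.

    probe_results is a sequence of booleans, True for a passing probe.
    """
    restarts = 0
    consecutive_failures = 0
    for passed in probe_results:
        if passed:
            # Any success resets the failure streak.
            consecutive_failures = 0
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            restarts += 1
            # The container was restarted, so counting starts over.
            consecutive_failures = 0
    return restarts


# Two failures followed by a success do not trigger a restart;
# the later run of three failures does.
history = [True, False, False, True, False, False, False, True]
print(restarts_needed(history))
```

Requiring several failures in a row keeps a single slow response from restarting an otherwise healthy container.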

Kube-proxy

Kube-proxy is the brain of the node’s networking. It runs on each node to manage host subnetting and make services available to other components. Each pod gets a unique IP address, and all containers in the same pod share that IP.

Kube-proxy forwards the traffic arriving at a node to the correct containers. It can do primitive load balancing and makes sure that the networking environment is appropriate.
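As an illustration of the primitive load balancing described above, the following sketch spreads incoming requests across a fixed set of pod endpoints in round-robin order. Round robin is used here only because it is the easiest strategy to follow; how kube-proxy actually chooses a backend depends on the proxy mode it runs in. The class name and the endpoint addresses are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Hand out backend endpoints in a repeating, fixed order."""

    def __init__(self, endpoints):
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        # Copy the list so later changes by the caller do not affect us.
        self._cycle = itertools.cycle(list(endpoints))

    def pick(self):
        """Return the endpoint that should receive the next request."""
        return next(self._cycle)


# Hypothetical pod endpoints behind one service.
balancer = RoundRobinBalancer(["10.0.1.4:8080", "10.0.2.7:8080", "10.0.3.2:8080"])

# Four requests: the fourth wraps around to the first endpoint.
picks = [balancer.pick() for _ in range(4)]
print(picks)
```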

Container Runtime

Every node must have a container runtime, the software responsible for starting and managing containers. Docker has been the most common choice, although alternatives such as containerd, CRI-O, and rkt also exist. Each unit of work on the cluster is implemented as one or more containers that must be deployed. The container runtime on each node runs the containers defined in the workloads, starting and stopping the containers in each pod.

Features of Kubernetes

Self-Monitoring: Kubernetes constantly checks the health of nodes and containers. It reacts to node failures and helps to avoid downtime.

Storage Orchestration: Kubernetes lets you choose the storage your apps need and mounts it for them to use.

Horizontal Scaling: Kubernetes scales resources horizontally, as well as vertically, quickly and easily. Autoscaling automatically changes the number of running containers based on CPU utilization.
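The CPU-based autoscaling just mentioned boils down to a small formula: the desired replica count is the current count multiplied by the ratio of observed to target utilization, rounded up. The sketch below applies that formula and clamps the result to a minimum and maximum. The function name, parameter names, and default bounds are assumptions for this example, and the sketch ignores refinements such as the tolerance band and stabilization window that the real autoscaler applies.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent,
                     min_replicas=1, max_replicas=10):
    """Compute the replica count that would bring CPU use back to the target."""
    if target_cpu_percent <= 0:
        raise ValueError("target_cpu_percent must be positive")
    # desired = ceil(current * observed / target)
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    # Never go below the minimum or above the maximum.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% CPU with a 60% target: scale out.
print(desired_replicas(4, 90, 60))

# 4 replicas at 30% CPU with a 60% target: scale in.
print(desired_replicas(4, 30, 60))
```

For example, four replicas running at 90% against a 60% target give 4 × 90 / 60 = 6 replicas.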

Automated Rollouts and Rollbacks: If something goes wrong after you make a change to the application, Kubernetes will roll the change back for you.

Compatibility: Kubernetes is an open-source tool, which gives you the freedom to move workloads anywhere you want, be it on-premises, hybrid, or cloud infrastructure.

Automation: Kubernetes automates the whole process, from deployment to scaling.

Rolling Update: A rolling update lets you update a containerized application with minimal downtime. The usual way to update such an application is to provide new images for its containers. Rather than replacing everything at once, a rolling update ensures that only a limited number of replicas are down while they are being updated, and that only a limited number of new replicas are created above the specified count.

Kubernetes supports rolling updates for both replication controllers and deployments. A Kubernetes Deployment creates new resources or replaces existing ones whenever required.
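The two limits described above correspond to the `maxUnavailable` and `maxSurge` settings of a Deployment’s rolling update strategy, both of which default to 25%. The sketch below works out what those percentages mean for a given replica count, assuming the documented rounding (down for `maxUnavailable`, up for `maxSurge`). The function name and the returned keys are made up for this example.

```python
import math


def rolling_update_bounds(replicas, max_unavailable_percent=25, max_surge_percent=25):
    """Return how many pods may be down or added during a rolling update."""
    # maxUnavailable is rounded down, maxSurge is rounded up.
    max_unavailable = math.floor(replicas * max_unavailable_percent / 100)
    max_surge = math.ceil(replicas * max_surge_percent / 100)
    return {
        "max_unavailable": max_unavailable,
        "max_surge": max_surge,
        # At least this many pods stay available throughout the update.
        "min_available": replicas - max_unavailable,
        # At most this many pods (old plus new) exist at any moment.
        "max_total": replicas + max_surge,
    }


# With 10 replicas and the default 25% limits.
bounds = rolling_update_bounds(10)
print(bounds)
```

With 10 replicas, 25% is 2.5, so at most 2 pods may be unavailable and at most 3 extra pods may be created, which keeps between 8 and 13 pods in existence during the update.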

Load Balancing: Load balancing works in sync with container replication, scaling, and service discovery. A dedicated service knows how many replicas are running and provides an endpoint that is exposed to clients. Traffic sent to the external IP address is forwarded to a port on a node and from there to another port on a pod, which is how the client reaches the service.
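The forwarding chain described above (external address, then a port on a node, then a port on a pod) matches what a Service of type `NodePort` sets up. The sketch below builds such a Service manifest as a Python dictionary and traces the two hops a request takes. The helper names, the service name, and all IP addresses and port numbers are hypothetical; the 30000-32767 range is the default NodePort range, which a cluster administrator can change.

```python
# Default range from which node ports are allocated.
NODE_PORT_RANGE = range(30000, 32768)


def make_nodeport_service(name, selector, port, target_port, node_port):
    """Build a NodePort Service manifest as a plain dict (illustrative values)."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError("node_port must be between 30000 and 32767")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": selector,
            "ports": [
                {"port": port, "targetPort": target_port, "nodePort": node_port}
            ],
        },
    }


def trace_request(service, node_ip, pod_ip):
    """Return the two hops of a request: the node port, then the pod port."""
    ports = service["spec"]["ports"][0]
    return [
        f"{node_ip}:{ports['nodePort']}",   # hop 1: port opened on the node
        f"{pod_ip}:{ports['targetPort']}",  # hop 2: port the container listens on
    ]


service = make_nodeport_service("web", {"app": "web"}, 80, 8080, 30080)
hops = trace_request(service, node_ip="203.0.113.10", pod_ip="10.0.1.4")
print(" -> ".join(hops))
```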